Driving Digital Transformation

“Digital, Digitization, Digital, Digital, Digital Transformation. There, I’ve hit my mandatory quota of 5 digital mentions for my presentation, now we can get to something interesting.” That was my opening line at a large data center and cloud conference in Rome. It wasn’t the one I’d planned,…

Category: Concepts

IT Needs its Gates and Jobs

Scour the data sheets and marketing of the best business technology hardware and software and you will see complexity. You will see references to ports, protocols, abstractions, management models, object-oriented and non-object-oriented practices, etc. Hand that data sheet to a highly intelligent, well-educated layperson and you will get a blank stare…

What Product Management, Sales, and Job Candidates Have in Common

Pop quiz, hotshot. What do the following three people all have in common? A product manager responsible for defining a product, driving engineering, and taking that product to market. Anyone working a sales job for any product, in any place. A candidate applying for any job, or requesting a promotion/raise at any…

Negotiating Your Career

In this 35-minute video I provide some advice for building your career, putting a price on your value, and negotiating for salary/promotion. I’m having some issues with the embedded video display, so the direct link may work better for you: https://youtu.be/ER5msIAx7do

Cloudy with a 100% Chance of Cloud

I recently remembered that my site and blog are called Define the Cloud. That realization led me to understand that I should probably write a cloudy blog from time to time. The time is now. It’s 2018, and most, if not all, of the early cloud predictions have proven to…

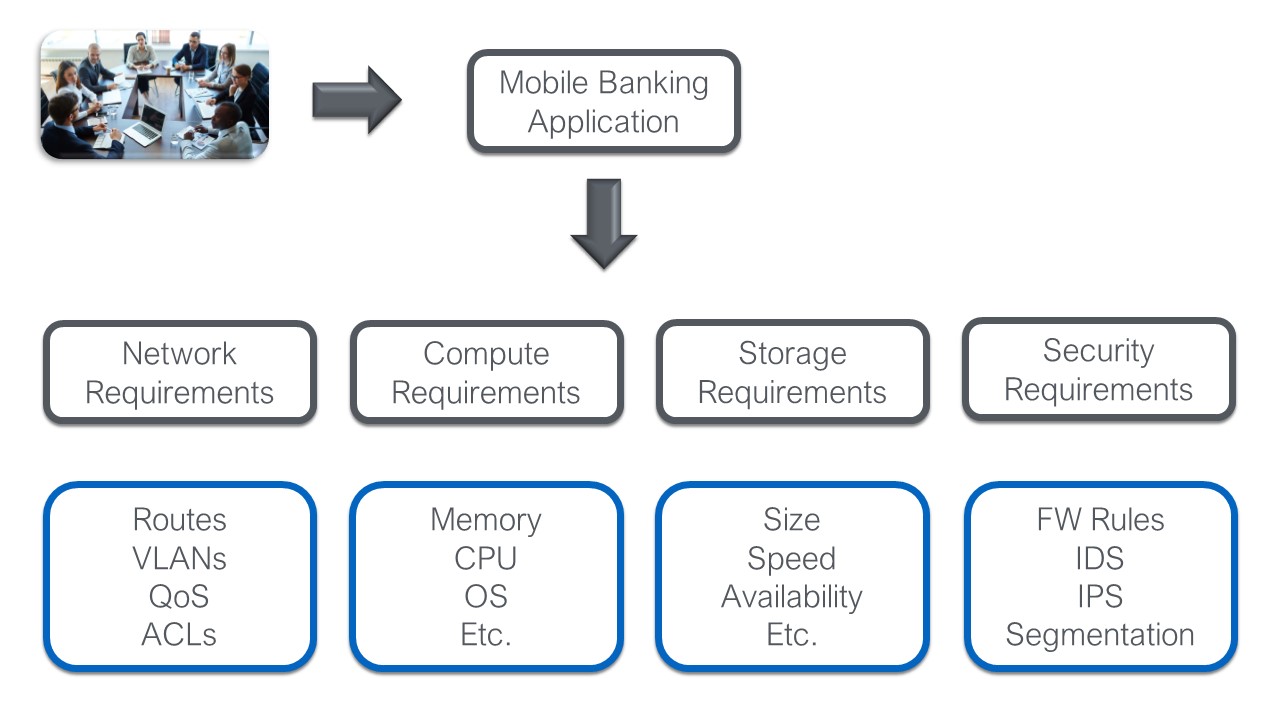

Intent all of the things: The Power of end-to-end Intent

The tech world is buzzing with talk of intent. Intent-based this, intent-driven that. Let’s take a look at intent, and where we as an industry want to go with it. First, and briefly, what’s intent? Intent is fairly simple if you let it be: it’s what you want from the…

Reassessing ‘Vendor Lock-In’

Lock-in is an oft-discussed consideration when making technology decisions. I tend to see it used more by vendors seeding Fear, Uncertainty, and Doubt (FUD), but I also see it in the native decision-making processes of many of my customers. Let’s take a look at it, starting with…

We Live in a Multi-Cloud World: Here’s Why

It’s almost 2019, and there’s still a lot of chatter, specifically from hardware vendors, that ‘we’re moving to a multi-cloud world.’ This is highly erroneous. When you hear someone say things like that, what they mean is ‘we’re catching up to the rest of the world and trying to sell…

Your Technology Sunk Cost is KILLING you

I recently bought a Nest Hello to replace my perfectly good, nearly new Ring Video Doorbell. The experience got me thinking about sunk cost in IT and how significantly it strangles the business and costs companies ridiculous amounts of money. When I first saw the Nest Hello, I had no…

Intent Driven Architecture Part III: Policy Assurance

Here I am, finally getting around to the third part of my blog on Intent Driven Architectures, but hey, what’s a year between friends? If you missed or forgot Parts I and II, the links are below: Intent Driven Architectures: WTF is Intent; Intent Driven Architectures Part II: Policy Analytics…