FlexFabric – Small Step, Right Direction

Note: I've added a couple of corrections below thanks to Stuart Miniman at Wikibon (http://wikibon.org/wiki/v/FCoE_Standards). See the comments for more.

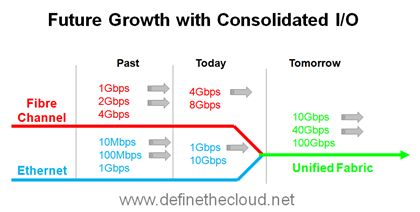

I’ve been digging a little more into the HP FlexFabric announcements to wrap my head around the benefits and positioning. I’m a big endorser of a single network for all applications (LAN, SAN, IPT, HPC, etc.), and FCoE is my tool of choice for that right now. While I don’t see FCoE as the end goal, mainly because of the limitations of running SCSI, which is the heart of FC, FCoE, and iSCSI, over any network, I do see FCoE as the way to go for convergence today. FCoE provides a seamless migration path for customers with an investment in Fibre Channel infrastructure and runs alongside other current converged models such as iSCSI, NFS, HTTP, you name it. As such, any vendor support for FCoE devices is a step in the right direction and provides options to customers looking to reduce infrastructure and cost.

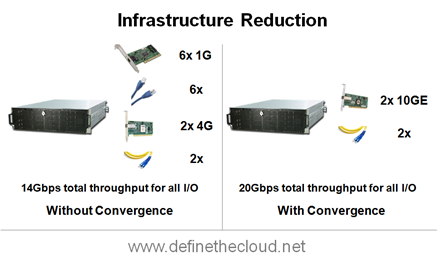

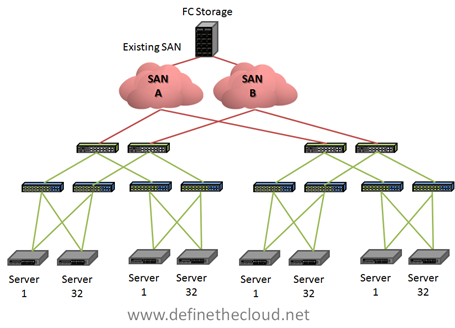

FCoE is quickly moving beyond the access layer, where it has been available for two years now. That being said, the access layer (server connections) is where it provides the strongest benefits for infrastructure consolidation, cabling reduction, and reduced power/cooling. A properly designed FCoE architecture provides a large reduction in the overall components required for server I/O. Let’s take a look at a very simple example using standalone servers (rack mount or tower).

In the diagram we see traditional Top-of-Rack (ToR) cabling on the left vs. FCoE ToR cabling on the right. This is for the access layer connections only. The infrastructure and cabling reduction is immediately apparent for server connectivity: 4 switches, 4 cables, and 2-4 I/O cards are reduced to 2 switches, 2 cables, and 2 I/O cards. This assumes only 2 networking ports are being used, which is not the case in many environments, including virtualized servers. For servers connected using multiple 1GE ports the savings are even greater.
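To put rough numbers on that comparison, here is a minimal sketch that scales the per-server figures above to a whole rack. The class and function names are my own, the 16-server rack is a hypothetical size, and the traditional design is given its best case of 2 I/O cards (the range above is 2-4), so treat it as back-of-the-envelope arithmetic rather than a sizing tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessDesign:
    """Access-layer footprint for one ToR cabling design."""
    name: str
    tor_switches: int         # ToR switches per rack
    cables_per_server: int
    io_cards_per_server: int


# Figures from the comparison in the text. The traditional design uses the
# low end (2) of the quoted 2-4 I/O card range, which is an assumption.
TRADITIONAL = AccessDesign("traditional", 4, 4, 2)
FCOE = AccessDesign("fcoe", 2, 2, 2)


def rack_totals(design, servers):
    """Total switches, cables, and I/O cards for a rack of `servers`."""
    return {
        "switches": design.tor_switches,
        "cables": design.cables_per_server * servers,
        "io_cards": design.io_cards_per_server * servers,
    }


def cables_saved(servers):
    """Cables removed per rack by moving from traditional to FCoE ToR."""
    before = rack_totals(TRADITIONAL, servers)["cables"]
    after = rack_totals(FCOE, servers)["cables"]
    return before - after


if __name__ == "__main__":
    # 16 servers per rack is a hypothetical example size.
    print(rack_totals(TRADITIONAL, 16))
    print(rack_totals(FCOE, 16))
    print("cables saved:", cables_saved(16))
```

With more than two network ports per server, only `cables_per_server` on the traditional side changes, which is why the gap widens for servers using multiple 1GE links.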

Two major vendor options exist for this type of cabling today:

Brocade:

- Brocade 8000 – This is a 1RU ToR CEE/FCoE switch with 24x 10GE fixed ports and 8x 1/2/4/8G fixed FC ports. Supports directly connected FCoE servers.

- This can be purchased as an HP OEM product.

- Brocade FCoE 10-24 Blade – This is a blade for the Brocade DCX Fibre Channel chassis with 24x 10GE ports supporting CEE/FCoE. Supports directly connected FCoE servers.

Note: Both Brocade data sheets list support for CEE, a proprietary pre-standard implementation of DCB. DCB itself is in the process of being standardized, with some parts ratified by the IEEE and some pending. The terms do get used interchangeably, so whether this is a typo or an actual implementation is something to discuss with your Brocade account team during the design phase. Additionally, Brocade specifically states use for Tier 3 and ‘some Tier 2’ applications, which suggests a lack of confidence in the protocol and may suggest a lack of commitment to support and future products. (This is what I would read from it based on the data sheets and Brocade’s overall positioning on FCoE from the start.)

Cisco:

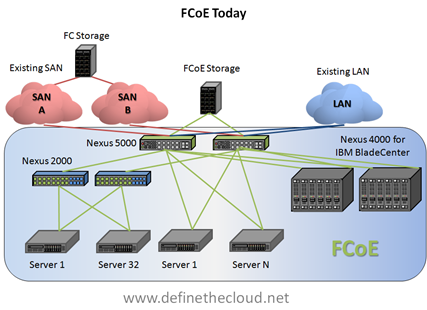

- Nexus 5000 – There are two versions of the Nexus 5000:

- 1RU 5010 with 20 10GE ports and 1 expansion module slot which can be used to add (6x 1/2/4/8G FC, 6x 10GE, 8x 1/2/4G FC, or 4x 1/2/4G FC and 4x 10GE)

- 2RU 5020 with 40 10GE ports and 2 expansion module slots which can be used to add (6x 1/2/4/8G FC, 6x 10GE, 8x 1/2/4G FC, or 4x 1/2/4G FC and 4x 10GE)

- Both can be purchased as HP OEM products.

- Nexus 7000 – There are two versions of the Nexus 7000, both of which are core/aggregation layer data center switches. The latest announced 32x 1/10GE line card supports the DCB standards, along with Cisco FabricPath, which is based on the pre-ratified TRILL standard.

Note: The Nexus 7000 currently only supports the DCB standard, not FCoE. FCoE support is planned for Q3CY10 and will allow for multi-hop consolidated fabrics.

Taking the noted considerations into account, any of the above options will provide the infrastructure reduction shown in the diagram above for standalone server solutions.

When we move into blade servers the options are different. This is because blade chassis have built-in I/O components, which are typically switches. Let’s look at the options for IBM and Dell, then take a look at what HP and FlexFabric bring to the table for HP C-Class systems.

IBM:

- BNT Virtual Fabric 10G Switch Module – This module provides 1/10GE connectivity and will support FCoE within the chassis when paired with the Qlogic switch discussed below.

- Qlogic Virtual Fabric Extension Module – This module provides 6x 8Gb FC ports and, when paired with the BNT switch above, will provide FCoE connectivity to CNA cards in the blades.

- Cisco Nexus 4000 – This module is a DCB switch providing FCoE frame delivery while enforcing DCB standards for proper FCoE handling. This device will need to be connected to an upstream Nexus 5000 for Fibre Channel Forwarder functionality. Using the Nexus 5000 in conjunction with one or more Nexus 4000s provides multi-hop FCoE for blade server deployments.

- IBM 10GE Pass-Through – This acts as a 1-to-1 pass-through for 10GE connectivity to IBM blades. Rather than providing switching functionality this device provides a single 10GE direct link for each blade. Using this device IBM blades can be connected via FCoE to any of the same solutions mentioned under standalone servers.

Note: Versions of the Nexus 4000 also exist for HP and Dell blades but have not been certified by the vendors; currently only IBM supports the device. Additionally, the Nexus 4000 is a standards-compliant DCB switch without FCF capabilities. This means that it provides the lossless delivery and bandwidth management required for FCoE frames, along with FIP snooping for FC security on Ethernet networks, but does not handle functions such as encapsulation and de-encapsulation. As a result, the Nexus 4000 can be used with any vendor’s FCoE forwarder (Nexus or Brocade currently), pending joint support from both companies.

Dell:

- Dell 10GE Pass-Through – Like the IBM pass-through, the Dell pass-through will allow connectivity from a blade to any of the rack mount solutions listed above.

Both Dell and IBM offer Pass-Through technology which will allow blades to be directly connected as a rack mount server would. IBM additionally offers two other options: using the Qlogic and BNT switches to provide FCoE capability to blades, and using the Nexus 4000 to provide FCoE to blades.

Let’s take a look at the HP options for FCoE capability and how they fit into the blade ecosystem.

HP:

- 10GE Pass-Through – HP also offers a 10GE pass-through providing the same functionality as both IBM and Dell.

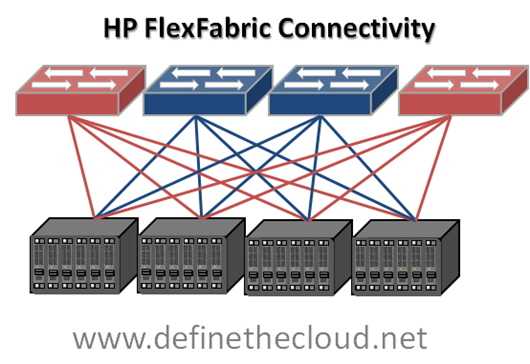

- HP FlexFabric – The FlexFabric switch is a Qlogic FCoE switch OEM’d by HP which provides a configurable combination of FC and 10GE ports upstream and FCoE connectivity across the chassis mid-plane. This solution only requires two switches for redundancy, as opposed to four with separate FC and Ethernet configurations. Additionally, this solution works with HP FlexConnect, providing 4 logical server ports for each physical 10GE link on a blade, and is part of the VirtualConnect solution, which reduces the management overhead of traditional blade systems through software.
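As a rough illustration of the "4 logical server ports for each physical 10GE link" idea, the sketch below checks a proposed bandwidth split. It assumes the four logical ports share the 10 Gb/s of the physical link and that each is given a fixed allocation, which the description above does not spell out; the function name and the example split are hypothetical and this is not HP's configuration interface.

```python
LINK_GBPS = 10.0    # one physical 10GE link on the blade
LOGICAL_PORTS = 4   # logical server ports per physical link, per the text


def remaining_bandwidth(gbps_per_port):
    """Validate a per-port split and return unallocated bandwidth in Gb/s.

    Assumption: the logical ports share the physical link, so their
    allocations must not add up to more than LINK_GBPS.
    """
    if len(gbps_per_port) != LOGICAL_PORTS:
        raise ValueError(f"expected {LOGICAL_PORTS} allocations")
    if any(g <= 0 for g in gbps_per_port):
        raise ValueError("each logical port needs a positive allocation")
    total = sum(gbps_per_port)
    if total > LINK_GBPS:
        raise ValueError(f"{total} Gb/s exceeds the {LINK_GBPS} Gb/s link")
    return LINK_GBPS - total


if __name__ == "__main__":
    # Hypothetical split: storage, VM traffic, live migration, management.
    print(remaining_bandwidth([4.0, 4.0, 1.0, 1.0]))
```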

On the surface FlexFabric sounds like the way to go with HP blades, and it very well may be, but let’s take a look at what it’s doing for our infrastructure/cable consolidation.

With the FlexFabric solution, FCoE exists only within the chassis and is split to native FC and Ethernet moving up to the Access or Aggregation layer switches. This means that while the number of required chassis switch components and blade I/O cards is reduced from four to two, there has been no reduction in cabling. Additionally, HP has no announced roadmap for a multi-hop FCoE device, and their current offerings for ToR multi-hop are OEM Cisco or Brocade switches. Because the HP FlexFabric switch is a Qlogic switch, any FC or FCoE implementation using FlexFabric connected to an existing SAN will be a mixed-vendor SAN, which can pose challenges with compatibility, feature/firmware disparity, and separate management models.
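The trade-off in that paragraph can be tallied in a few lines. This is only a sketch: the names are mine, and representing cabling as the set of link types leaving the chassis is a simplification I am assuming for illustration, not a count of actual cables.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChassisDesign:
    """Simplified view of one blade chassis I/O design."""
    name: str
    chassis_switches: int
    blade_io_cards: int
    uplink_types: tuple   # link types leaving the chassis (simplified)


# Counts follow the text: four reduced to two. Uplink types are a modeling
# assumption: FlexFabric still splits to native FC and Ethernet upstream.
SEPARATE = ChassisDesign("separate FC + Ethernet", 4, 4, ("Ethernet", "FC"))
FLEXFABRIC = ChassisDesign("FlexFabric", 2, 2, ("Ethernet", "FC"))


def compare(before, after):
    """Summarize what a move from `before` to `after` removes."""
    return {
        "chassis_switches_saved": before.chassis_switches - after.chassis_switches,
        "blade_io_cards_saved": before.blade_io_cards - after.blade_io_cards,
        "uplink_types_removed": sorted(
            set(before.uplink_types) - set(after.uplink_types)
        ),
    }


if __name__ == "__main__":
    print(compare(SEPARATE, FLEXFABRIC))
```

The empty `uplink_types_removed` list is the point: the chassis gets simpler, but both kinds of uplink still leave it.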

HP’s announcement to utilize the Emulex OneConnect adapter as the LAN on motherboard (LOM) adapter makes FlexFabric more attractive, but the benefits of that LOM would also be recognized using the 10GE Pass-Through connected to a third-party FCoE switch, or a native Nexus 4000 in the chassis if HP were to approve and begin to OEM the product.

Summary:

As the title states, FlexFabric is definitely a step in the right direction, but it’s only a small one. It definitely shows FCoE commitment, which is fantastic and should reduce the FCoE FUD flinging. The main limitations are the lack of cable reduction and the overall FCoE portfolio. For customers using, or planning to use, VirtualConnect to reduce the management overhead of the traditional blade architecture, this is a great solution to reduce chassis infrastructure. For other customers it would be prudent to seriously consider the benefits and drawbacks of the pass-through module connected to one of the HP OEM ToR FCoE switches.