Micro-segmentation: What, Why, How?

There’s a lot of buzz around the term micro-segmentation (uSeg), and I thought I’d take some time to demystify it, starting with some history. If you’re more of a visual learner, skip to the end and check out the video.

uSeg has its roots in ‘zero-trust model’ thinking and architectures. At the most basic level, the idea is to greatly enhance security models that rely primarily on perimeter security implementations, such as firewalls.

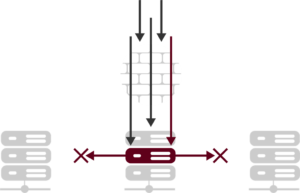

The reason for this is simple: if you rely solely on perimeter security, you are completely exposed when (not if) the perimeter is breached. The graphic below depicts this.

In the graphic, a single penetration of the firewall can lead to a compromised server or workload, which then becomes the attacker, with no security left to stop it.

Architectures attempting to enhance perimeter security have been implemented by funneling all traffic through firewalls, by using VLAN Access Control Lists (VACLs), and with other similar techniques.

The failure of these attempts comes down to four things:

- Visibility: limited knowledge of what traffic can/can’t be blocked.

- Cost: firewall hardware, etc.

- Manageability: there’s no good way to manage that many distributed firewall rules or ACLs.

- Complexity: any way you slice it, this is complex, and complexity kills agility while adding risk and cost and reducing manageability.

Micro-segmentation revives this conversation in a new form. It has created so much buzz because the tools have caught up to the point where we can reduce, or eliminate, the four problems above.

Technologies including big data, SDN, and advanced automation have matured enough to provide frameworks for granular segmentation at a micro level, or even a ‘nano’ level (another term some use).

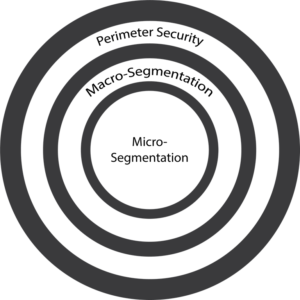

The advantage of this level of segmentation is depicted below. In the graphic a penetration of the perimeter security compromises a host or workload, but malicious traffic from that host is blocked by micro-segmentation zones. This prevents the attack from propagating further.
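To make the idea concrete, here is a minimal sketch of a micro-segmentation zone as a default-deny allowlist. The workload names (`web-01`, `app-01`, `db-01`), ports, and the `Flow`/`is_allowed` names are hypothetical, and real products express policy very differently; this only illustrates the principle that anything not explicitly allowed is blocked, even inside the perimeter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """A hypothetical flow: source workload, destination workload, protocol, port."""
    src: str
    dst: str
    protocol: str
    port: int


# Hypothetical allowlist for a three-tier app; anything not listed is denied.
ALLOWED_FLOWS = {
    Flow("web-01", "app-01", "tcp", 8080),
    Flow("app-01", "db-01", "tcp", 5432),
}


def is_allowed(flow: Flow) -> bool:
    """Default deny: a flow passes only if it matches an explicit allow rule."""
    return flow in ALLOWED_FLOWS


# A compromised web server trying to reach the database directly is blocked,
# even though both workloads sit inside the same perimeter.
print(is_allowed(Flow("web-01", "app-01", "tcp", 8080)))  # True
print(is_allowed(Flow("web-01", "db-01", "tcp", 5432)))   # False
```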

As the graphic depicts, micro-segmentation should not be viewed as a replacement for perimeter security; instead, it is an enhancement. Micro-segmentation provides advanced security within the secure perimeter and, in some cases, can simplify, not replace, the perimeter security architecture.

In many cases, a third layer of security is also implemented: a layer of ‘macro-segmentation.’ Macro-segmentation can be used as a starting point for micro-segmentation, deployed in conjunction with it, or ignored if not required.

The macro-segmentation layer provides segmentation between large, static groups. Good examples are compliant vs. non-compliant systems, and development life-cycle stages (dev, test, prod, etc.).

Macro-segments can be deployed on a much wider variety of devices because they need less granularity and change less often. Typically, macro-segmentation is deployed using Software Defined Networking (SDN) solutions.

The two primary requirements for macro-segments are broad scope and a limited change rate, because macro-segmentation will be deployed across a broader range of solutions. In general, the more granular the scope, or the higher the change rate, the more automated the platform will need to be.
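A rough sketch of how macro-segments might be expressed, assuming every workload carries an environment label and a compliance label (the labels, workload names, and `macro_allowed` function are hypothetical). One broad rule covers every workload, which is why the change rate stays low: a new production server only needs to be labeled, not given new rules.

```python
# Hypothetical labels: each workload carries an environment and a compliance tag.
WORKLOAD_LABELS = {
    "web-01": {"env": "prod", "compliance": "pci"},
    "db-01": {"env": "prod", "compliance": "pci"},
    "build-07": {"env": "dev", "compliance": "none"},
}

# The macro policy is one broad rule over large, static groups: traffic is
# allowed only between workloads in the same environment and compliance zone.
MACRO_KEYS = ("env", "compliance")


def macro_allowed(src: str, dst: str) -> bool:
    src_labels = WORKLOAD_LABELS[src]
    dst_labels = WORKLOAD_LABELS[dst]
    return all(src_labels[key] == dst_labels[key] for key in MACRO_KEYS)


print(macro_allowed("web-01", "db-01"))    # True: same env and compliance zone
print(macro_allowed("build-07", "db-01"))  # False: dev cannot reach prod
```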

In the next graphic, we see the three layers of security operating together. Each layer builds on the last, becoming more granular and enhancing protection.
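The layering can be sketched as an ordered series of checks: a connection must pass every layer, and the first layer that rejects it is reported. This is a simplified illustration under stated assumptions: the zones, environments, workloads, and ports are hypothetical, and for brevity the macro and micro layers here only evaluate internal (east-west) traffic.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical connection record: where the source sits, source, destination, port.
Conn = Dict[str, object]

ENV = {"web-01": "prod", "app-01": "prod", "db-01": "prod", "ci-03": "dev"}
MICRO_ALLOW = {("web-01", "app-01", 8080), ("app-01", "db-01", 5432)}


def perimeter_layer(c: Conn) -> bool:
    # Coarsest layer: from the internet, only HTTPS to the web tier is admitted.
    if c["src_zone"] == "internet":
        return c["dst"] == "web-01" and c["port"] == 443
    return True  # internal (east-west) traffic does not cross the perimeter


def macro_layer(c: Conn) -> bool:
    # Broad, static rule: internal traffic must stay within one environment.
    if c["src_zone"] == "internet":
        return True  # simplification: only east-west traffic is evaluated here
    return ENV[c["src"]] == ENV[c["dst"]]


def micro_layer(c: Conn) -> bool:
    # Most granular layer: explicit workload-to-workload allowlist.
    if c["src_zone"] == "internet":
        return True  # simplification: only east-west traffic is evaluated here
    return (c["src"], c["dst"], c["port"]) in MICRO_ALLOW


LAYERS: List[Tuple[str, Callable[[Conn], bool]]] = [
    ("perimeter", perimeter_layer),
    ("macro", macro_layer),
    ("micro", micro_layer),
]


def first_blocking_layer(c: Conn) -> Optional[str]:
    """Return the name of the first layer that rejects the connection, or None."""
    for name, check in LAYERS:
        if not check(c):
            return name
    return None


def conn(src_zone: str, src: str, dst: str, port: int) -> Conn:
    return {"src_zone": src_zone, "src": src, "dst": dst, "port": port}


print(first_blocking_layer(conn("internet", "client", "web-01", 443)))  # None
print(first_blocking_layer(conn("internet", "client", "db-01", 5432)))  # perimeter
print(first_blocking_layer(conn("internal", "ci-03", "db-01", 5432)))   # macro
print(first_blocking_layer(conn("internal", "web-01", "db-01", 5432)))  # micro
```

The last case mirrors the breach scenario: the web server is inside the perimeter and in the right environment, so only the micro layer stops it from reaching the database.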

Micro-segmentation is the most granular of the three layers, and there are many options for how to address these segments. Micro-segments can be built around workloads (Server, VM, Container), applications (www.onisick.com, WordPress, Oracle), or traffic flows themselves (TCP X and UDP Y to IP Z). The best workload protection tools in this space offer the ability to do all three.
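As a hedged sketch of running the three segmentation methods in parallel, the hypothetical `Rule` type below can match on workloads, on applications, or on flow attributes, and a connection is allowed if any rule matches. All names, IPs, and ports are illustrative assumptions, not any particular tool’s policy model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Connection:
    src_workload: str
    dst_workload: str
    dst_app: str
    dst_ip: str
    protocol: str
    port: int


@dataclass(frozen=True)
class Rule:
    """A hypothetical allow rule; any field left as None acts as a wildcard."""
    src_workload: Optional[str] = None
    dst_workload: Optional[str] = None
    dst_app: Optional[str] = None
    dst_ip: Optional[str] = None
    protocol: Optional[str] = None
    port: Optional[int] = None

    def matches(self, c: Connection) -> bool:
        pairs = [
            (self.src_workload, c.src_workload),
            (self.dst_workload, c.dst_workload),
            (self.dst_app, c.dst_app),
            (self.dst_ip, c.dst_ip),
            (self.protocol, c.protocol),
            (self.port, c.port),
        ]
        return all(want is None or want == have for want, have in pairs)


RULES = [
    # Workload-based: the web VM may talk to the app VM on any port.
    Rule(src_workload="web-01", dst_workload="app-01"),
    # Application-based: anyone may reach WordPress over HTTPS.
    Rule(dst_app="wordpress", protocol="tcp", port=443),
    # Flow-based: UDP 53 to one specific IP (a DNS server).
    Rule(dst_ip="10.0.0.53", protocol="udp", port=53),
]


def allowed(c: Connection) -> bool:
    # Default deny: allowed only if at least one rule matches.
    return any(rule.matches(c) for rule in RULES)


print(allowed(Connection("laptop-9", "blog-01", "wordpress", "10.0.2.9", "tcp", 443)))  # True
print(allowed(Connection("laptop-9", "db-01", "oracle", "10.0.3.3", "tcp", 1521)))     # False
```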

The ability to use various segmentation methods in parallel is important. Every environment will have different security needs. Moreover, within every environment, different applications, data, and workloads will have different needs. Having options allows you to fine-tune cost, time-to-deploy, and security risk accordingly.

The most critical thing to account for as you deploy granular segmentation is change rate. Many tools can enforce micro-segments, but very few can handle authorized change at a rate that doesn’t impact business agility.

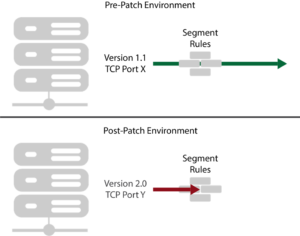

Connectivity in a data center tends to change rapidly, so static, non-automated micro-segmentation will quickly cause outages when authorized changes are made. Software patching is a great example of this.

Software patches often modify the TCP/UDP port(s) used by the application or operating system (OS). If this occurs in an environment where micro-segmentation is tightly deployed, that port change can cause outages.

The old port remains open while the new, required port is blocked by now-outdated segmentation. Manual remediation processes for this type of issue take 48-72 hours, which is not nearly fast enough in a micro-segmented world. This is shown below.
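The patching scenario can be simulated in a few lines. This sketch assumes the currently listening ports for each application are known from some inventory or discovery source (the `inventory` dict and the `billing` app are hypothetical stand-ins), and compares a rule set written once against one regenerated after the change.

```python
from __future__ import annotations


def build_rules(inventory: dict[str, set[int]]) -> set[tuple[str, int]]:
    """Derive (application, port) allow rules from the current inventory."""
    return {(app, port) for app, ports in inventory.items() for port in ports}


def allowed(rules: set[tuple[str, int]], app: str, port: int) -> bool:
    return (app, port) in rules


# Before the patch: the hypothetical "billing" app listens on port 8443.
inventory = {"billing": {8443}}
static_rules = build_rules(inventory)  # written once and never updated

# A software patch moves the service to port 9443.
inventory = {"billing": {9443}}
automated_rules = build_rules(inventory)  # regenerated after the change

print(allowed(static_rules, "billing", 9443))     # False: new port blocked (outage)
print(allowed(static_rules, "billing", 8443))     # True: stale port still open
print(allowed(automated_rules, "billing", 9443))  # True
print(allowed(automated_rules, "billing", 8443))  # False
```

The static rules fail in both directions: the new port is blocked and the old one stays open. Regenerating rules automatically from the current state is one way to keep pace with authorized change; how that state is discovered is outside the scope of this sketch.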

Micro-segmentation is a security architecture that should be explored and assessed by organizations of all sizes and types. The required level of granularity, speed-to-deploy, and other factors will vary by organization.

For another view on this topic, check out the video below that I produced.