We Live in a Multi-Cloud World: Here's Why

It's almost 2019 and there's still a lot of chatter, specifically from hardware vendors, that 'We're moving to a multi-cloud world.' This is highly erroneous. When you hear someone say things like that, what they mean is 'we're catching up to the rest of the world and trying to sell a product in the space.'

Multi-cloud is a reality, and it's here today. Companies are deploying applications on-premises, using traditional IT stacks, automated stacks, IaaS, and private-cloud infrastructure. They are simultaneously using more than one public cloud resource. If you truly believe that your company, or company x, is not operating in a multi-cloud fashion, start asking the lines of business. The odds are you'll be surprised.

Most of the world has moved past the public-cloud vs. private-cloud debate. We realized that there are far more use-cases for hybrid clouds than the original asinine idea of 'cloud-bursting', which I ranted about for years (http://www.definethecloud.net/the-reality-of-cloud-bursting/ and https://www.networkcomputing.com/cloud-infrastructure/hybrid-clouds-burst-bubble/2082848167). After the arguing, vendor naysaying, and general moronics slowed down, we started to see that specific applications made more sense in specific environments, for specific customers. Imagine that: we came full-circle to the only answer that ever applies in technology: it depends.

There are many factors that come into play when deciding where to deploy or build an application: specifically, which public or private resource to use and which deployment model (IaaS, PaaS, SaaS, etc.). The following is not intended to be an exhaustive list:

- Application maturity and deployment model

- Data requirements (type, structure, locality, latency, etc.)

- Security requirements and organizational security maturity. Note: in general, public cloud is no more or less secure than private. Security is always the responsibility of the teams developing and supporting the application, and it can be effectively achieved regardless of infrastructure location.

- Scale (general size, elasticity requirements, etc.)

- Licensing requirements/restrictions

- Hard support restrictions. Some examples include: requires bare-metal deployment, Fibre Channel storage, specific hardware in the form of an appliance, magic elves who shit rainbows.

- Cost: both how much it will cost in any given environment and what type of costs are most beneficial to your business (capital vs. operational expenses, etc.)

- Governance, compliance, regulatory concerns.

Lastly, don't discount people's technology religions. There is typically more than one way to skin a cat, so it's not often worth it to fight an uphill battle against an entrenched opinion. Personally, when I'm working with my customers, if I start to sense a 'religious' stance toward a technology or vendor, I assess whether a more palatable option can fit the same need. Only when the answer is no do I push the issue. I believe I've discussed that in this post: http://www.definethecloud.net/why-cisco-ucs-is-my-a-game-server-architecture/.

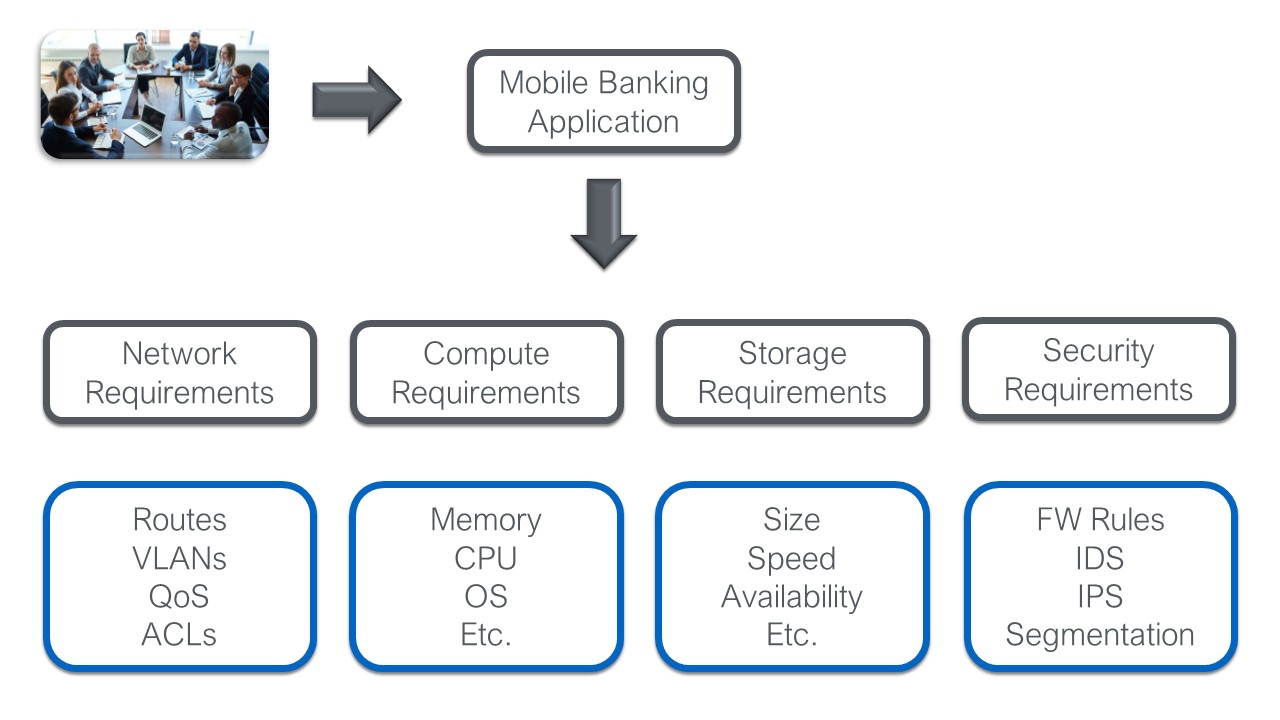

The benefits of multi-cloud models are wide and varied and, like anything else, they come with drawbacks. The primary benefit I focus on is the ability to put the application in focus. With traditional on-premises architectures we are forced to define, design, and deploy our application stack based on infrastructure constraints. This is never beneficial to the success of our applications, or to our ability to deploy and change them rapidly.

When we move to a multi-cloud world we can start by defining the app we need, draw its requirements from that, and finally use those requirements to decide which infrastructure options are best suited to them. Sure, I can purchase or build CRM or expense software and deploy it in my data center or my cloud provider's, but I can also simply use the applications as a service. In a multi-cloud world I have all of those options available after defining the business requirements of the application.
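To make the requirements-first idea concrete, here is a minimal sketch. Everything in it is an assumption for illustration: the `Environment` and `AppRequirements` fields, the catalogue entries, and the `candidates` function are hypothetical names, and the handful of attributes covers only a few of the factors listed earlier. The point is only the direction of the logic: the app's requirements filter the infrastructure, not the other way around.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Environment:
    """A hypothetical deployment target (public or private)."""
    name: str
    bare_metal: bool      # can satisfy a hard bare-metal support restriction
    regions: frozenset    # where data may physically live
    elastic: bool         # can scale up and down on demand
    opex_only: bool       # billed purely as operational expense


@dataclass(frozen=True)
class AppRequirements:
    """Requirements drawn from the application, not from the infrastructure."""
    needs_bare_metal: bool = False
    data_region: Optional[str] = None   # None means no locality constraint
    needs_elasticity: bool = False
    prefers_opex: bool = False


def candidates(app, catalogue):
    """Keep environments that meet every hard requirement.

    The cost-model preference is soft: it only affects ordering.
    """
    viable = [
        env for env in catalogue
        if (not app.needs_bare_metal or env.bare_metal)
        and (app.data_region is None or app.data_region in env.regions)
        and (not app.needs_elasticity or env.elastic)
    ]
    # Stable sort: if the app prefers opex, opex-only environments come first.
    return sorted(viable, key=lambda env: not (app.prefers_opex and env.opex_only))


# Illustrative catalogue; names and attributes are made up.
CATALOGUE = [
    Environment("on-prem", True, frozenset({"eu"}), False, False),
    Environment("public-cloud-a", False, frozenset({"eu", "us"}), True, True),
    Environment("public-cloud-b", False, frozenset({"us"}), True, True),
]

crm = AppRequirements(data_region="eu", needs_elasticity=True, prefers_opex=True)
legacy = AppRequirements(needs_bare_metal=True)

crm_options = [env.name for env in candidates(crm, CATALOGUE)]
legacy_options = [env.name for env in candidates(legacy, CATALOGUE)]
```

With this made-up catalogue, the CRM app lands on the one elastic environment with an EU region, while the bare-metal legacy app can only go on-premises. A real decision would weigh far more than four booleans, and "use it as a service" would be one more entry in the catalogue.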

Here are two additional benefits that have made multi-cloud today's reality. I'm sure there are others, so help me out in the comments:

Cloud portability:

Even if you only intend to use one public cloud resource, and only in an IaaS model, building for portability can save you pain in the long run. Let history teach you this lesson. You built your existing apps assuming you'd always run them from your own infrastructure. Now you're struggling with the cost and complexity of moving them to cloud. Might history repeat itself? Remember that cost models and features change with time, which means it may be attractive down the road to switch from cloud a to cloud b. If you neglect to design for this up-front, the pain will be exponentially greater down the road.

Note: This doesn't mean you need to go all wild-west with this shit. You can select a small set of public and private services such as IaaS and add them to a well-defined service-catalogue. It's really not that far off from what we've traditionally done within large on-premises IT organizations for years.
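One common way to design for portability, sketched here under stated assumptions, is to have application code depend on a small interface of your own rather than on any one provider's API. `BlobStore`, `InMemoryStore`, `save_report`, and `migrate` are all hypothetical names; the in-memory class merely stands in for an adapter that would wrap a real provider's SDK, which this sketch does not attempt to show.

```python
from typing import Protocol


class BlobStore(Protocol):
    """The only storage surface the application is allowed to depend on."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in adapter. A real one would wrap a provider-specific client."""

    def __init__(self) -> None:
        self._blobs = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


def save_report(store: BlobStore, report_id: str, body: str) -> str:
    """Application logic: knows about BlobStore, nothing about any cloud."""
    key = f"reports/{report_id}.txt"
    store.put(key, body.encode("utf-8"))
    return key


def migrate(source: BlobStore, target: BlobStore, keys) -> None:
    """Copy the given keys from one store to another."""
    for key in keys:
        target.put(key, source.get(key))


cloud_a = InMemoryStore()   # pretend this is today's provider
cloud_b = InMemoryStore()   # pretend this is tomorrow's
report_key = save_report(cloud_a, "q3", "revenue up")
migrate(cloud_a, cloud_b, [report_key])
```

If the switch from cloud a to cloud b ever becomes attractive, the work is writing one new adapter and copying data; `save_report` does not change. The trade-off is that a narrow interface like this cannot expose a provider's unique features, which is the tension the next section is about.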

Picking the right tool for the job:

Like competitors in any industry, public clouds attempt to differentiate from one another to win your business. This differentiation comes in many forms: cost, complexity, unique feature-set, security, platform integration, openness, etc. The requirements for an individual app, within any unique company, will place more emphasis on one or more of these. In a multi-cloud deployment those requirements can be used to decide the right cloud, public or private, to use. Simply saying 'We're moving everything to cloud x' is placing you right back into the same situation where your infrastructure dictates your applications.

As I stated early on, multi-cloud doesn't come without its challenges. One of the more challenging parts is that the tools to alleviate these challenges are, for the most part, in their infancy. The three most common challenges are: holistic visibility (cost, performance, security, compliance, etc.), administrative manageability, and policy/intent consistency, especially as it pertains to security.

Visibility:

We've always had visibility challenges when operating IT environments. Almost no one can tell you exactly how many applications they have, or hell, even define what an 'application' is to them. Is it the front-end? Is it the three tiers of the web-app? What about the dependencies: is Active Directory an app or a service? Oh shit, what's the difference between an app and a service? Because this is already a challenge within the data center walls, and across on-premises infrastructure, it gets exacerbated as we move to a multi-cloud model. More tools are emerging in this space, but be wary, as most promise far more than they deliver. Remember not to set your expectations higher than needed. For example, if you can find a tool that simply shows you all your apps across the multi-cloud deployment from one portal, you're probably better off than you were before.
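That modest "one portal" goal boils down to merging per-environment inventories into a single view. The sketch below assumes each environment can be asked for a list of app names; `unified_inventory` and the lambda sources are hypothetical placeholders for whatever provider-specific inventory queries you actually have, and it dodges the hard question above of what counts as an app.

```python
from collections import defaultdict


def unified_inventory(sources):
    """Merge per-environment inventories into one app -> environments map.

    `sources` maps an environment name to a callable that returns the app
    names running there. Each callable is a placeholder for a
    provider-specific inventory query.
    """
    view = defaultdict(set)
    for env_name, list_apps in sources.items():
        for app in list_apps():
            view[app].add(env_name)
    # Sort for a stable, readable report.
    return {app: sorted(envs) for app, envs in sorted(view.items())}


# Made-up inventories for illustration.
inventory = unified_inventory({
    "on-prem": lambda: ["crm", "payroll"],
    "public-cloud-a": lambda: ["crm", "web"],
})
```

Here `inventory` shows `crm` running in both environments and the other two apps in one each. Even a view this crude answers "what do we have, and where?", which is more than many shops can say today.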

Manageability:

Every cloud is unique in how it operates, is managed, and how applications are written to it. For the most part they all use their own proprietary APIs to deploy applications, provide their own dashboards and visibility tools, etc. This means that each additional cloud you use will add some additional overhead and complexity, typically in an exponential fashion. The solution is to be selective in which private and public resources you use, and add to that only when the business and technical benefits outweigh the costs.

Tools exist to assist in this multi-cloud management category, but none that are simply amazing. Without ranting too much on specific options, the typical issues you'll see with these tools are that they oversimplify things, dumbing down the underlying infrastructure and negating its advantages; they require far too much customization and software development upkeep; and they lack critical features or vendor support.

Intent Consistency:

Policy, or intent, can be described as SLAs, user-experience, up-time, security, compliance, and risk requirements. These are all things we're familiar with supporting and caring for on our existing infrastructure. As we expand into multi-cloud we find the tools for intent enforcement are all very disparate, even if the end result is the same. I draw an analogy to my woodworking. When joining two pieces of wood there are several joinery options to choose from. The type of joint will narrow the selection down, but typically leaves more than one viable option to get the desired result. Depending on the joint I'm working on, I must know the available options, and pick my preference from the ones that will work for that application.

Each public or private infrastructure generally provides the tools to achieve an equivalent level of intent enforcement (joints), but they each offer different tools for the job (joinery options). This means that if you stretch an application or its components across clouds, or move it from one to the other, you'll be stuck defining its intent multiple times.

This category offers the most hope, in that an overarching industry architecture is being adopted to solve it. This is known as intent-driven architecture, which I've described in a three-part series starting here: http://www.definethecloud.net/intent-driven-architectures-wtf-is-intent/. The quick and dirty description is that 'Intent Driven' is analogous to the park button appearing in many cars. I push park, and the car is responsible for deciding if the space is parallel, pull-through, or pull-in, then deciding the required maneuvers to park me. With intent-driven deployments I say park the app with my compliance accounted for, and the system is responsible for the specifics of the parking space (infrastructure). Many vendors are working towards products in this category, and many can work in very heterogeneous environments. While it's still in its infancy, it has the most potential today. The beauty of intent-driven methodologies is that while alleviating policy inconsistency they also help with manageability and visibility.
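A toy version of the park button might look like the following. This is a sketch of the general idea only, not any vendor's product: `Intent`, `to_cloud_a`, `to_cloud_b`, and `park` are hypothetical names, and both output formats are invented to show two environments wanting the same policy expressed differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    """What the business wants, stated once, with no infrastructure detail."""
    app: str
    encrypt_at_rest: bool
    allowed_ingress_ports: tuple


def to_cloud_a(intent):
    """Hypothetical translator: cloud a takes an ordered list of rule dicts."""
    rules = [{"action": "allow", "port": p} for p in intent.allowed_ingress_ports]
    rules.append({"action": "deny", "port": "*"})   # default deny goes last
    return {
        "app": intent.app,
        "firewall": rules,
        "disk_encryption": intent.encrypt_at_rest,
    }


def to_cloud_b(intent):
    """Hypothetical translator: cloud b takes a flat, string-based policy."""
    ports = ",".join(str(p) for p in intent.allowed_ingress_ports)
    return {
        "name": intent.app,
        "ingress": f"allow:{ports};deny:all",
        "storage_class": "encrypted" if intent.encrypt_at_rest else "standard",
    }


TRANSLATORS = {"cloud-a": to_cloud_a, "cloud-b": to_cloud_b}


def park(intent, environment):
    """Push the park button: the system works out the specifics."""
    return TRANSLATORS[environment](intent)


web = Intent(app="web", encrypt_at_rest=True, allowed_ingress_ports=(443,))
policy_a = park(web, "cloud-a")
policy_b = park(web, "cloud-b")
```

The intent for `web` is written once, and moving or stretching the app across clouds means calling `park` with a different environment rather than redefining the policy by hand. The real work, of course, lives in the translators, which is why this category is still young.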

Overall, multi-cloud is here, and it should be. There are of course companies that deploy holistically on-premises, or holistically in one chosen public cloud, but in today's world these are more corner case than the norm, especially with more established companies.

For another perspective, check out this excellent blog article I was pointed to by Dmitri Kalintsev (@dkalintsev): https://bravenewgeek.com/multi-cloud-is-a-trap/. I agree with much, if not all, of what he has to say. His article is focused primarily on running an individual app or service across multiple clouds, whereas I'm positioning different cloud options for different workloads.